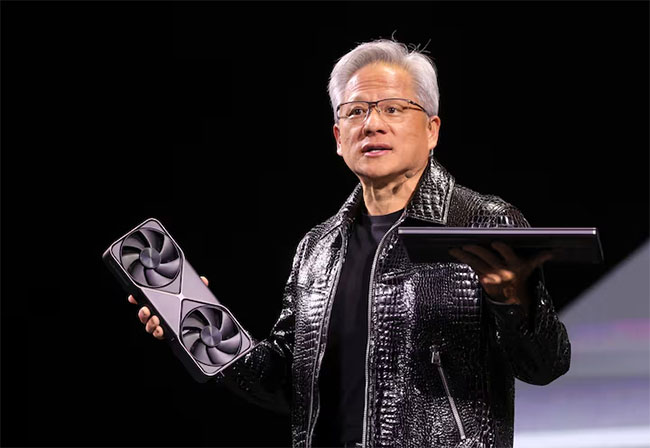

Nvidia launches Vera Rubin, its next major AI platform, at CES 2026

January 6, 2026 04:55 pm

AI powerhouse Nvidia (NVDA) announced the launch of its next-generation Vera Rubin superchip at CES 2026 on Monday in Las Vegas. One of six chips that make up what Nvidia is now calling its Rubin platform, Vera Rubin combines one Vera CPU and two Rubin GPUs in a single processor.

Nvidia is framing the Rubin platform as ideal for agentic AI, advanced reasoning models, and mixture-of-experts (MoE) models, which combine a series of “expert” AIs and route queries to the appropriate one depending on the question a user asks.
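To make the routing idea concrete, here is a minimal sketch of how an MoE layer might pick an expert. It is illustrative only and not Nvidia's or any specific model's implementation: the three toy experts, the gate scores, and the function names `route` and `moe_forward` are all hypothetical. In practice the gate is typically a learned network that routes individual tokens rather than whole questions.

```python
import math

def softmax(scores):
    """Turn raw gate scores into probabilities that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route(gate_scores, top_k=1):
    """Return (expert_index, weight) pairs for the top_k highest-scoring experts."""
    probs = softmax(gate_scores)
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    chosen = ranked[:top_k]
    norm = sum(probs[i] for i in chosen)  # renormalize over the chosen experts
    return [(i, probs[i] / norm) for i in chosen]

# Hypothetical "experts": stand-ins for separate sub-networks.
EXPERTS = [
    lambda x: x + 1.0,
    lambda x: x * 2.0,
    lambda x: -x,
]

def moe_forward(x, gate_scores, top_k=1):
    """Run only the selected experts and blend their outputs by gate weight."""
    return sum(w * EXPERTS[i](x) for i, w in route(gate_scores, top_k))

if __name__ == "__main__":
    # Assumed gate scores; a real gate would compute these from the input.
    print(route([0.1, 2.0, -1.0], top_k=1))
    print(moe_forward(3.0, [0.1, 2.0, -1.0], top_k=1))
```

Because only the selected experts run for a given input, the compute per query can be much smaller than the model's total parameter count would suggest.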

“Rubin arrives at exactly the right moment, as AI computing demand for both training and inference is going through the roof,” Nvidia CEO Jensen Huang said in a statement.

“With our annual cadence of delivering a new generation of AI supercomputers — and extreme codesign across six new chips — Rubin takes a giant leap toward the next frontier of AI.”

In addition to the Vera CPU and Rubin GPUs, the Rubin platform includes four other networking and storage chips: the Nvidia NVLink 6 Switch, Nvidia ConnectX-9 SuperNIC, Nvidia BlueField-4 DPU, and Nvidia Spectrum-6 Ethernet Switch.

All of that can then be packaged together into Nvidia’s Vera Rubin NVL72 server, which combines 72 GPUs into a single system. Join several NVL72s together, and you get Nvidia’s DGX SuperPOD, a kind of massive AI supercomputer. These huge systems are what hyperscalers like Microsoft, Google, Amazon, and social media giant Meta are spending billions of dollars to get their hands on.

Nvidia is also touting its AI storage, called Nvidia Inference Context Memory Storage, which the company says is necessary to store and share data generated by trillion-parameter and multi-step reasoning AI models.

All of this is meant to make the Rubin platform more efficient than Nvidia’s previous-generation Grace Blackwell offering.

According to the company, Rubin will result in a 4x reduction in the number of GPUs needed to train the same MoE versus Blackwell systems.

Cutting down on the number of GPUs means companies would instead be able to put the excess units to work performing different tasks, improving efficiency. Nvidia says Rubin also provides a 10x reduction in inference token costs.

Tokens in AI models represent things like words, parts of sentences, images, and videos. Models use tokens to break down these concepts into more easily processable pieces via tokenization.

But processing tokens is a resource-intensive, and therefore energy-hungry, process, especially when you’re dealing with enormous AI models. Cutting down on the cost of tokens could provide improved total cost of ownership for Rubin versus its predecessors.
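As a rough back-of-the-envelope illustration of the two headline claims, the sketch below applies the 4x and 10x factors to made-up baselines. The 1,000-GPU cluster, the $2.00 per million tokens price, and the function names are assumptions chosen for the arithmetic, not figures from Nvidia.

```python
import math

def gpus_needed(baseline_gpus, reduction_factor=4):
    """GPUs required after an N-fold reduction, rounded up to whole units."""
    return math.ceil(baseline_gpus / reduction_factor)

def inference_cost(baseline_cost_per_million, tokens, reduction_factor=10):
    """Cost of serving `tokens` tokens after an N-fold cut in per-token cost."""
    baseline_total = baseline_cost_per_million * tokens / 1_000_000
    return baseline_total / reduction_factor

if __name__ == "__main__":
    # Hypothetical baseline: a 1,000-GPU Blackwell training cluster.
    print(gpus_needed(1000))
    # Hypothetical baseline: $2.00 per million tokens, 5 million tokens served.
    print(inference_cost(2.0, 5_000_000))
```

Under these assumed numbers, the same training job would need 250 GPUs instead of 1,000, and a workload that cost $10 to serve would cost $1.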

Nvidia says it has been sampling the Rubin platform with partners and that the platform is now in full production.

Nvidia’s chip lead has made it the world’s largest company by market cap, with a valuation of roughly $4.6 trillion. In October, its market cap swelled to more than $5 trillion, but concerns over AI spending and persistent fears about an AI ecosystem bubble brought its valuation down to its current level.

The company is also facing more competition, both from rival AMD, which is launching its own Helios rack-scale systems to compete with the NVL72, and from Nvidia’s own customers.

In October, Google and Amazon announced that Anthropic will expand the use of their respective custom processors, which the AI company already uses to power its Claude platform. Both Google and Amazon have stakes in Anthropic.

And according to The Information, Google is having conversations with Meta (META) and other cloud companies about use of the search giant’s chips in their data centers.

Still, neither AMD nor Nvidia’s own customers are likely to unseat the company from its AI throne anytime soon. And if Nvidia is able to continue its yearly release schedule, it will be even more difficult for them to catch up.

Source: Yahoo

--Agencies